AI Cyber Security Q&A with Pragatix

- Niv Nissenson

- Nov 24, 2025

AI adoption in the enterprise is accelerating, but so are the concerns around it. Across nearly every industry survey, security consistently ranks as a top-three concern. Leaders want the productivity and innovation AI unlocks, but not at the cost of exposing sensitive data, violating compliance requirements, or creating new attack surfaces.

That’s why I’ve been spending more time exploring the emerging category of AI security: solutions that let companies safely use GenAI without compromising privacy, governance, or control.

One company doing meaningful work in this space is AGAT Software. AGAT is a long-standing leader in enterprise security and compliance, with more than a decade of experience providing Unified Communications (UC) compliance solutions to global clients, including 25+ Fortune 500 organizations operating in highly regulated environments.

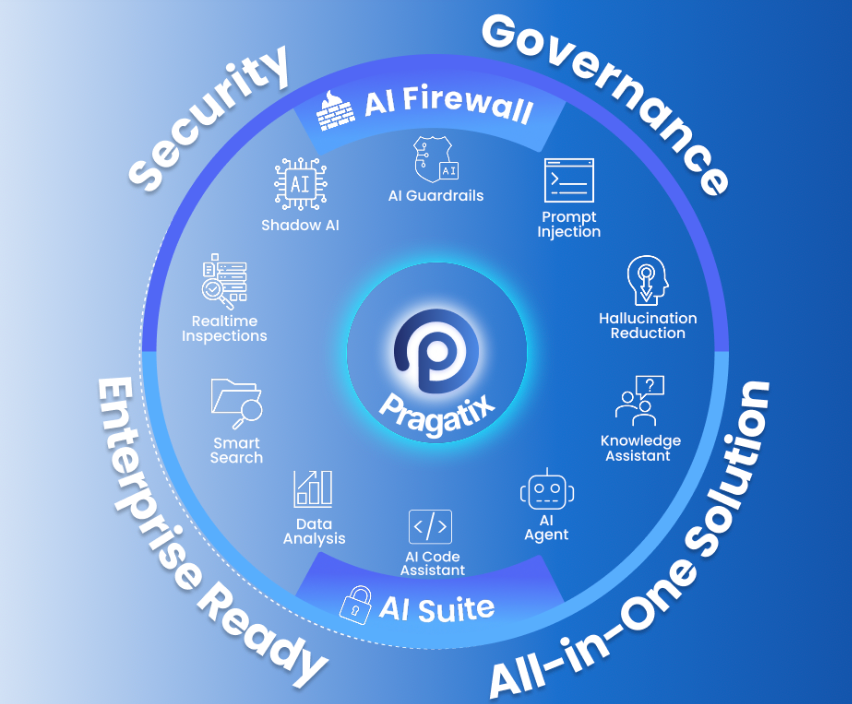

Their newest product, Pragatix, is designed specifically for the AI era: a platform that enables the use of Generative AI across the enterprise while ensuring that data remains private, protected, and fully governed. Pragatix helps organizations embrace AI confidently by enforcing security controls, preventing data leakage, and giving companies the transparency they need around how AI is being used.

I recently met Yoav Crombie, CEO of AGAT, at a startup event, and given the company’s deep expertise in AI security, I took the opportunity to dive deeper. The result is a practical and insightful Q&A on the risks, realities, and best practices for secure AI adoption, along with an inside look at how Pragatix is helping enterprises move forward safely.

Q&A

TheCFOAI: Enterprises are rapidly adopting generative AI, but security and governance concerns remain front and center. How do you see the balance evolving between AI innovation and the need for data protection inside large organizations?

Yoav Crombie: AI’s business value is clear—but so are the risks. The next phase of enterprise AI is not just adoption, but controlled innovation.

Security leaders increasingly understand that saying “no AI” doesn’t work. Employees will use it anyway, which only pushes usage underground and increases risk. A better approach is “don’t say no, say how.”

Enterprises now want to give employees access to powerful tools like ChatGPT or Copilot with proper guardrails, governance, and visibility.

That’s exactly why the Pragatix platform offers two complementary approaches:

AI Firewall

For companies that want employees to use public AI tools safely. It provides visibility, policy enforcement, and data-flow control.

Private AI Suite

For companies that prefer to bring AI inside the organization, in an isolated, secure on-prem or private-cloud environment.

Together, these approaches let organizations innovate with AI without losing control of their data.

TheCFOAI: Many executives still think of cybersecurity in terms of network and endpoint threats. What new types of risks are emerging now that AI systems can access internal data and make autonomous decisions?

Yoav Crombie: Traditional security tools were never built for AI. Some risks—like data leakage—aren’t new, but the scale, speed, and accessibility of AI make them far more severe.

New and evolving risks include:

AI-driven data exposure — an employee can upload a sensitive document to an AI tool without understanding the implications, exposing data far beyond its intended audience.

Response manipulation risks — most tools inspect data going into AI, but almost no one inspects what comes out. Altered outputs can mislead users or drive harmful decisions.

Single point of failure — AI systems often have access to all corporate knowledge. If someone bypasses safeguards, the potential impact is massive.

AI agent risks — unlike chatbots, agents can take actions: query databases, update systems, initiate workflows. If manipulated, they can cause significant operational harm.

Ethics and toxicity — biased or harmful outputs can influence hiring, legal decisions, or customer interactions.

User intent as a new risk layer — the same document uploaded for spell-checking carries a different risk profile than uploading it for legal analysis or financial forecasting.

The Pragatix AI Firewall addresses these challenges by analyzing prompts and responses, classifying data sensitivity, understanding user intent, and blocking or flagging risky interactions.
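To make the idea of inspecting traffic in both directions concrete, here is a minimal Python sketch of the general technique. It is not Pragatix’s implementation: the regex patterns and the `inspect_text` and `guarded_call` functions are hypothetical, and `model` stands in for any function that sends a prompt to an AI service and returns its reply. A production firewall would use far richer classifiers than three regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical detection patterns; a real firewall would use far richer classifiers.
PATTERNS = {
    # 13 to 16 digits, optionally separated by spaces or hyphens
    "credit_card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
    "confidential_marker": re.compile(r"\bCONFIDENTIAL\b", re.IGNORECASE),
}


@dataclass
class Inspection:
    direction: str       # "prompt" (going into the AI) or "response" (coming out)
    findings: list[str]  # names of the patterns that matched
    redacted_text: str   # text with every match replaced by a placeholder


def inspect_text(text: str, direction: str) -> Inspection:
    """Scan text flowing in either direction and redact anything that matches."""
    findings = []
    redacted = text
    for name, pattern in PATTERNS.items():
        if pattern.search(redacted):
            findings.append(name)
            redacted = pattern.sub(f"[{name.upper()}]", redacted)
    return Inspection(direction, findings, redacted)


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Inspect the prompt before the model sees it, and the response before the user does."""
    inbound = inspect_text(prompt, "prompt")
    response = model(inbound.redacted_text)
    outbound = inspect_text(response, "response")
    return outbound.redacted_text
```

The key design choice is symmetry: the same check runs on the prompt going in and on the response coming out, which covers the response-manipulation gap Crombie describes, where most tools only look at what goes into the AI.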

TheCFOAI: “Shadow AI” use—employees relying on unsanctioned AI tools—is becoming a real compliance problem. How serious is this issue, and how can enterprises regain visibility and control without slowing down innovation?

Yoav Crombie: It’s extremely serious—and extremely common. Microsoft research shows 71% of employees use unapproved AI tools at work.

Banning AI doesn’t solve the problem; it only drives usage underground, increasing the risk.

The better approach is monitoring and governed enablement, not restriction.

Pragatix AI Firewall gives full visibility into:

who is using AI

what they are using it for

the sensitivity of the data involved

what the user intends to do with the AI

This allows organizations to:

allow low-risk use cases

restrict or block high-risk ones

prevent exposure of sensitive or regulated information

monitor emerging risk patterns, including AI agent activity

It brings AI out of the shadows and into a secure, governed framework.
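The allow / flag / block logic described above can be sketched as a small decision table that combines data sensitivity with user intent, plus an audit record of who used which tool, with what data, and why. This is an illustrative sketch under stated assumptions: the `Sensitivity` levels, intent names, risk weights, and thresholds are invented for the example and are not Pragatix’s actual policy model.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    REGULATED = 2  # e.g. customer financial records


# Hypothetical intent categories with an illustrative risk weight.
INTENT_RISK = {
    "spell_check": 0,
    "summarize": 1,
    "legal_analysis": 2,
    "financial_forecast": 2,
}


@dataclass(frozen=True)
class AuditRecord:
    user: str
    tool: str
    sensitivity: Sensitivity
    intent: str
    decision: str


def decide(sensitivity: Sensitivity, intent: str) -> str:
    """Combine data sensitivity and stated intent into allow / flag / block."""
    # Unknown intents are treated as the riskiest category.
    score = int(sensitivity) + INTENT_RISK.get(intent, 2)
    if score <= 1:
        return "allow"
    if score == 2:
        return "flag"
    return "block"


def evaluate(user: str, tool: str, sensitivity: Sensitivity, intent: str,
             log: list[AuditRecord]) -> str:
    """Decide on one AI interaction and record it for later review."""
    decision = decide(sensitivity, intent)
    log.append(AuditRecord(user, tool, sensitivity, intent, decision))
    return decision
```

With these weights, the same internal document is allowed for spell-checking but blocked for legal analysis, mirroring the user-intent point Crombie made earlier. Every decision lands in the audit log, so emerging risk patterns can be reviewed rather than silently blocked.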

Inside the Pragatix Platform

TheCFOAI: Pragatix offers both an AI Firewall and an AI Suite. Can you explain how these two solutions complement each other—what problems each one solves and how they fit into an enterprise AI strategy?

Yoav Crombie: The two solutions form a unified defense and enablement architecture for enterprise AI.

The AI Firewall protects organizations when employees use public AI tools. Although it was originally designed for external AI governance, many customers now also deploy the Firewall for their private, internal LLMs, because internal AI often has access to the company’s most sensitive data and customers want the same guardrails for both public and private AI usage.

The Private AI Suite is a complete on-prem or private-cloud platform with ready-to-use capabilities, including knowledge chat, enterprise search, natural-language database analysis, code assistance, AI agents, and secure connectors to internal systems. It is enterprise-ready and requires minimal setup, enabling organizations without dedicated AI teams to deploy advanced AI capabilities quickly.

Together, these solutions provide:

• safe and governed use of public AI

• sovereign internal AI intelligence

• unified governance and auditing across all AI interactions

Few vendors provide both a standalone AI security layer and a full-featured private AI platform. Pragatix offers both in a single ecosystem for AI security and AI productivity.

TheCFOAI: The AI Suite emphasizes local, private deployment and zero data exposure. Why is on-prem or controlled-cloud deployment so important today, and what kinds of organizations benefit most from this approach?

Yoav Crombie: Many organizations want AI—but not at the cost of losing control of their data.

On-prem or private AI is essential when:

data is highly sensitive (financial records, customer data, legal documents)

regulations or contracts prohibit sharing data with external cloud services

the organization operates without internet access or requires fully air-gapped systems

connecting internal databases to external AI services is simply too risky

the organization wants an enterprise-ready solution with minimal setup and all AI services available immediately

As AI becomes a central decision-making engine, enterprises need data sovereignty, strong permission controls, and safeguards to ensure the AI never outputs information it shouldn’t.

Pragatix Private AI gives organizations complete control over their AI environment, enabling powerful intelligence without compromising privacy.

The Future of Trustworthy AI

TheCFOAI: Looking ahead, what does a “secure and compliant” AI ecosystem look like to you—one where companies can confidently use AI without compromising security or ethics?

Yoav Crombie: The future of enterprise AI is governed, auditable, hybrid, and agent-aware.

Key elements include:

strong oversight of AI agents, which introduce new operational risks

unified governance across public and private AI usage

granular permission controls ensuring the AI never reveals data a user isn't allowed to see

enterprise-grade visibility into who uses AI, why, and with what data

automated policy enforcement that prevents risk before it happens

Trustworthy AI isn’t about choosing between innovation and security. It’s about maturing both together, and that’s exactly the gap Pragatix is built to fill.
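One common way to deliver the “granular permission controls” element Crombie lists is permission-aware retrieval: documents are filtered against the user’s access rights before they are placed in the model’s context, so the AI never sees, and therefore never reveals, what the user cannot read. The sketch below assumes a simple per-document group ACL; `Document`, `User`, `retrieve`, and `build_context` are hypothetical names, and the keyword search stands in for a real search or vector index.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL attached at ingestion time


@dataclass
class User:
    name: str
    groups: set[str] = field(default_factory=set)


def retrieve(query: str, corpus: list[Document]) -> list[Document]:
    """Naive keyword retrieval, standing in for a real search or vector index."""
    terms = query.lower().split()
    return [d for d in corpus if any(t in d.text.lower() for t in terms)]


def build_context(user: User, query: str, corpus: list[Document]) -> str:
    """Drop every document the user cannot read before the model sees it."""
    hits = retrieve(query, corpus)
    # A document is permitted if the user shares at least one group with its ACL.
    permitted = [d for d in hits if d.allowed_groups & user.groups]
    return "\n".join(d.text for d in permitted)
```

Filtering before generation, rather than trying to scrub the model’s answer afterwards, means the permission check does not depend on the model behaving correctly.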

TheCFOAI: Many companies want to adopt AI but don’t have a dedicated AI team. How does Pragatix support organizations that need an enterprise-ready, low-effort deployment?

Yoav Crombie: The Private AI Suite is fully enterprise-ready with prebuilt search, knowledge chat, database analysis, code assistance, agents, and connectors—requiring minimal internal setup.

TheCFOAI: Many vendors are offering either AI security or AI applications. What makes Pragatix different?

Yoav Crombie: Pragatix is one of the few platforms that provides both a standalone AI Firewall and a full Private AI Suite, giving enterprises unified governance and intelligence within a single ecosystem.