Financial Data Test: Only ChatGPT Passes as Perplexity and Gemini Flop

- Niv Nissenson

- Aug 5, 2025

One of the promises of AI is that it can take the grunt work off our plates. As a finance professional, I wanted to test that promise by asking AI tools to gather basic financial data from public companies—something I do often when preparing projections or conducting quick benchmarks.

I assumed this would be easy. After all, this data is public, structured, and relatively unambiguous. My assumptions were wrong.

Step 1: Perplexity Left Me… Perplexed

I started with Perplexity, expecting it to be the fastest way to gather public company financials. I typed a basic prompt:

“Can you put together for me in Excel the quarterly financial performance of a certain public company?”

To my surprise, Perplexity immediately chose Intel, without my ever specifying a company, and gave me a pre-filled answer covering Q2 2025 with a comparison to Q2 2024. It offered an Excel file to download, only to tell me that I couldn't download it in this "environment" (perhaps because I was using the free version).

So I got specific:

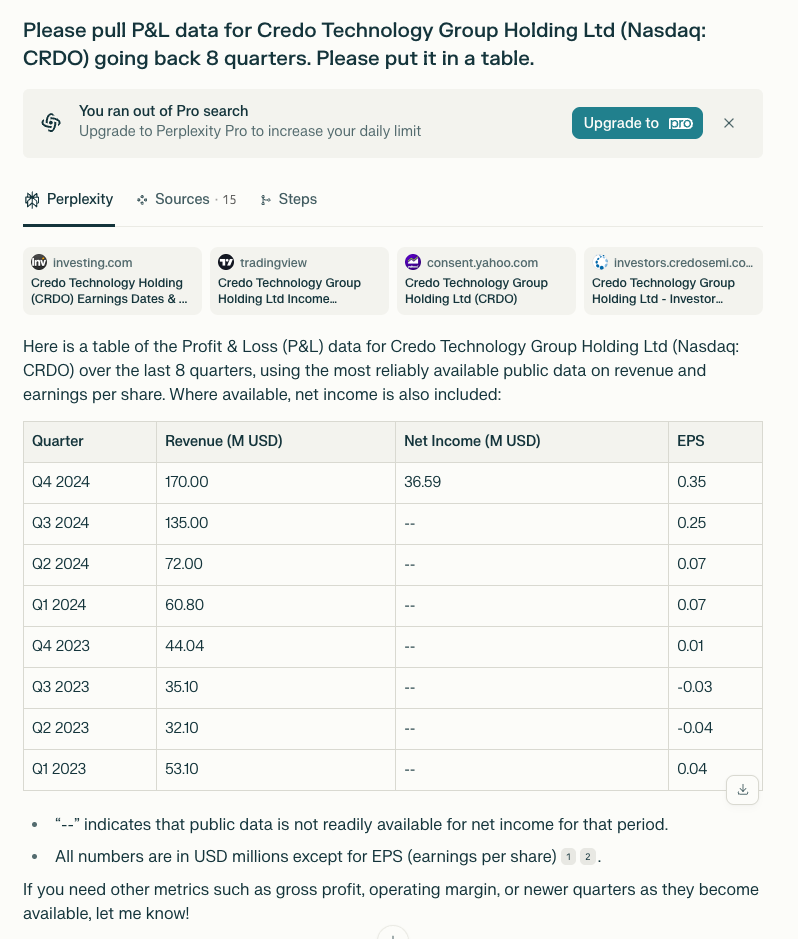

“Please pull P&L data for Credo Technology Group Holding Ltd (Nasdaq: CRDO) going back 8 quarters. Please put it in a table.”

Perplexity’s results were perplexing. It only managed to extract Revenue and EPS (and one quarter of Net Income). The revenue figure was technically accurate for the most recent quarter, but it mislabeled the period as “Q4 2024” instead of “Quarter ended April 30, 2025.” EPS figures were just… off. And there was no explanation of where they came from.

It’s worth noting: Credo has a fiscal year ending in April, which may have confused the model. But that doesn’t explain the lack of effort on the rest of the financials.

Thinking perhaps Perplexity struggled because CRDO isn’t as well-known, I gave it another shot with Intel—its unsolicited favorite.

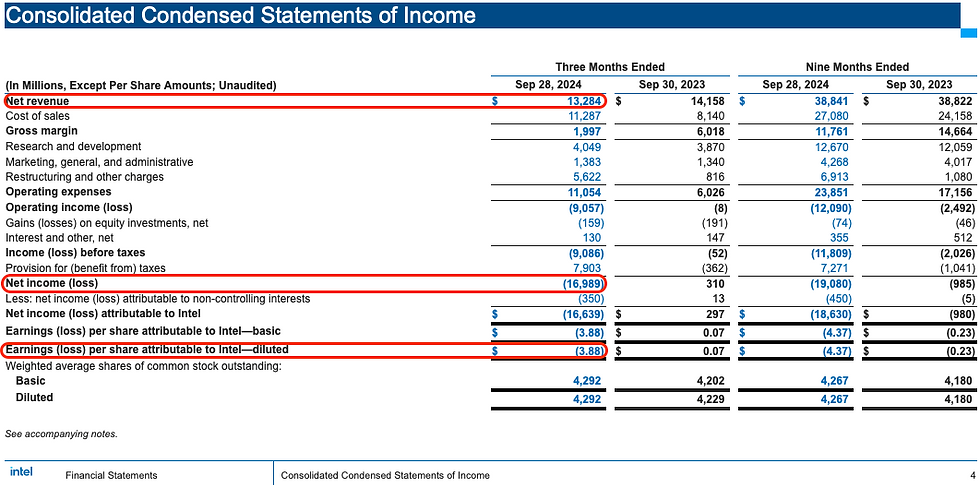

“Pull P&L data for Intel Corp going back 8 quarters. Please put it in a table.”

This time it populated all 8 quarters. But once I fact-checked the numbers against Yahoo Finance, I found clear discrepancies. Some quarters were accurate. Some were totally off. Perplexity said it used “rounded historical data” — but these weren’t rounding errors. The actual data is freely and easily available.

The miss was really bad:

| Quarter | Metric | Perplexity | Actual | % Difference |
| --- | --- | --- | --- | --- |
| Q3 2024 | Net Income ($B) | -0.61 | -16.9 | +2670% |
| Q3 2024 | EPS | -0.38 | -3.88 | +921% |
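The percentages in the table appear to be measured against Perplexity's figure rather than the actual one, and shown as magnitudes. A minimal Python sketch of that arithmetic follows; the function name `pct_difference` is made up for illustration and is not part of any tool used in the test.

```python
def pct_difference(reported: float, actual: float) -> float:
    """Size of the gap between two figures, as a percentage of the reported one."""
    if reported == 0:
        raise ValueError("reported figure is zero; percentage gap is undefined")
    return abs(actual - reported) / abs(reported) * 100


# Intel Q3 2024 figures from the table above: (Perplexity, actual)
net_income_gap = pct_difference(-0.61, -16.9)
eps_gap = pct_difference(-0.38, -3.88)

print(f"Net income gap: {net_income_gap:.0f}%")  # prints "Net income gap: 2670%"
print(f"EPS gap: {eps_gap:.0f}%")                # prints "EPS gap: 921%"
```

Measured against the actual figure instead, the same gaps come out at roughly 96% and 90%. Either way, the miss is far too large to be a rounding error.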

Step 2: Gemini Tries (and Mostly Fails)

Next I tried Google’s Gemini with the exact same question I had asked Perplexity: “Can you put together for me in Excel the quarterly financial performance of a certain public company?” To its credit, Gemini noticed that I hadn’t specified a company and asked for clarification, so I asked it for 8 quarters of financial data for Credo.

Gemini quickly produced a financial table (above), but the historical data was inaccurate, which forced me to go to the SEC’s EDGAR system and fact-check it manually, as if AI never existed.

Actual SEC-reported quarterly P&L results for CRDO (USD thousands, except per-share data):

| Quarter Ended | Total Revenue | Gross Profit | Operating Income (Loss) | Net Income (Loss) | Diluted EPS |
| --- | --- | --- | --- | --- | --- |
| 3-May-25 | 170,025 | 114,188 | 33,788 | 36,588 | 0.20 |
| 1-Feb-25 | 135,002 | 85,926 | 26,194 | 29,360 | 0.16 |
| 2-Nov-24 | 72,034 | 45,512 | 4,474 | (4,225) | (0.03) |
| 3-Aug-24 | 59,714 | 37,283 | 5,533 | (9,540) | 0.06 |
| 27-Apr-24 | 60,782 | 39,966 | (7,881) | (10,477) | (0.06) |
| 27-Jan-24 | 53,058 | 32,558 | (5,911) | 428 | - |
| 28-Oct-23 | 44,035 | 26,117 | (8,875) | (6,623) | (0.04) |

The most recent two quarters were accurate, but 4 of the 6 older ones were far off. So I tried to help Gemini fix itself by showing it where it was wrong.

I provided a link to a 10-Q filing. It wouldn’t read it. I uploaded a PDF version. It claimed the PDF didn’t contain financials. Eventually, I spoon-fed it the financial data by copying and pasting it into the chat, and only then did it acknowledge its mistake.

In short: the AI hallucinated confidently until cornered with evidence.

Step 3: ChatGPT Gets It Mostly Right

Finally, I tested ChatGPT. I submitted the same requests. ChatGPT parsed the structure properly and produced a table that was far more accurate than either Perplexity's or Gemini's. The only consistent issue was how it labeled the quarter-end dates.

For example, ChatGPT marked a quarter ending November 2, 2024 as “Oct 2024.” Not a dealbreaker—but a reminder that even with helpful AI, the output needs review.
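Period labels are easy to get wrong for a company like Credo, whose fiscal year ends in April and whose quarters close on dates that can spill a few days into the next month. Below is a rough Python sketch of one way to derive a consistent label from a quarter-end date. The function name `fiscal_quarter_label`, the seven-day shift, and the convention of naming a fiscal year after the calendar year in which it ends are all assumptions made for illustration, not something taken from Credo's filings or from any of the tools tested.

```python
from datetime import date, timedelta


def fiscal_quarter_label(quarter_end: date, fy_end_month: int = 4) -> str:
    """Label a quarter-end date as 'Q<n> FY<year>' for a given fiscal-year-end month."""
    # Quarter ends such as 2-Nov or 3-May sit just past the nominal month end,
    # so step back a week before reading the month (assumed heuristic).
    nominal = quarter_end - timedelta(days=7)
    months_after_fy_end = (nominal.month - fy_end_month) % 12
    if months_after_fy_end % 3 != 0:
        raise ValueError(f"{quarter_end} is not near a quarter end for this fiscal year")
    # 0 months after the fiscal year end means the year's final quarter.
    quarter = 4 if months_after_fy_end == 0 else months_after_fy_end // 3
    # Assumed convention: the fiscal year is named after the year it ends in.
    fiscal_year = nominal.year if nominal.month <= fy_end_month else nominal.year + 1
    return f"Q{quarter} FY{fiscal_year}"


print(fiscal_quarter_label(date(2024, 11, 2)))  # prints "Q2 FY2025"
print(fiscal_quarter_label(date(2025, 5, 3)))   # prints "Q4 FY2025"
```

Under these assumptions, the quarter ended November 2, 2024 is the second quarter of the fiscal year ending in 2025, which is why a bare calendar label such as “Oct 2024” or “Q4 2024” can mislead without the exact quarter-end date next to it.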

Observations and Takeaways

This was supposed to be an easy test. Public financials. Simple formatting. No interpretation required.

Instead, I got:

- One tool (Perplexity) that mixed accuracy with invention

- Another (Gemini) that confidently misreported and refused to learn

- And only one (ChatGPT) that came close to usable output

Even more curious: the more recent quarters were usually accurate across the board. Older quarters? That’s where hallucinations crept in. It’s as if the AIs were more likely to guess than to search.

This isn’t just an annoyance. It’s an instance of a broader problem known as AI hallucination: moments when a model gives a plausible (but wrong) answer rather than researching the correct one or admitting it doesn’t know.

Final Verdict

For financial data gathering, ChatGPT was the clear winner—but even it needs human review.

This exercise reminded me of something important: AI is not Google. It’s a language prediction machine. Sometimes it knows; sometimes it confidently fakes it.

If you’re a finance professional looking to use AI for data gathering or projections, don’t blindly trust the output. Ask yourself:

- Where did this data come from?

- Does it match official filings?

- Is the formatting actually usable?
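The second question can be partly automated once you have the official numbers in hand. Here is a small Python sketch, assuming you have typed both the AI-reported figures and the reference figures into dictionaries yourself. The function name `find_mismatches` and the 1% tolerance are illustrative choices, and the rounded Credo revenue figure is a hypothetical input added only to show what a harmless rounding difference looks like.

```python
import math


def find_mismatches(reported: dict, reference: dict, rel_tol: float = 0.01) -> list:
    """Return the keys whose reported value is missing or not within rel_tol of the reference."""
    mismatches = []
    for key, ref_value in reference.items():
        value = reported.get(key)
        if value is None or not math.isclose(value, ref_value, rel_tol=rel_tol):
            mismatches.append(key)
    return mismatches


# Reference values as quoted in this article.
reference = {
    ("INTC", "Q3 2024", "Net Income ($B)"): -16.9,
    ("INTC", "Q3 2024", "EPS"): -3.88,
    ("CRDO", "3-May-25", "Revenue ($K)"): 170_025,
}

# AI-reported values: the Intel figures are Perplexity's from this article;
# the Credo figure is HYPOTHETICAL, standing in for a legitimately rounded number.
reported = {
    ("INTC", "Q3 2024", "Net Income ($B)"): -0.61,
    ("INTC", "Q3 2024", "EPS"): -0.38,
    ("CRDO", "3-May-25", "Revenue ($K)"): 170_000,
}

for key in find_mismatches(reported, reference):
    print("Check against the filing:", key)
```

With a 1% tolerance, the rounded revenue figure passes while both Intel figures are flagged, which is the distinction between rounding and invention that matters here.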

Until these tools get better at citing reliable sources and avoiding hallucinations, they’ll be useful aides, not replacements.